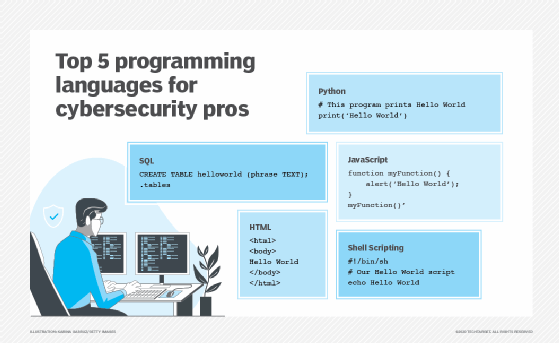

Python

What is Python?

Python is a high-level, general-purpose, interpreted programming language that supports object-oriented programming. Like Perl, it is popular among programmers experienced in C++ and Java.

Python runs on several operating systems, including UNIX-based systems, macOS and Microsoft Windows 10 and Windows 11. Older releases also ran on MS-DOS and OS/2.

Python's origins and benefits

Python first appeared in 1991, more than three decades ago. Its inventor, Dutch programmer Guido van Rossum, named it after Monty Python, his favorite comedy group at the time, and its television series Monty Python's Flying Circus. Since then, it has attracted a vibrant community of enthusiasts who fix bugs and extend the capabilities of the code.

Python is known for being powerful, fast to develop in and for making programming more fun. Because the language is dynamically typed, Python coders can assign a value to a variable without declaring what type the variable is supposed to hold. Users can download Python at no cost and start learning to code with it right away. The source code is freely available and open for modification and reuse.
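As a small illustration of dynamic typing, the sketch below rebinds one name to values of three different types without any declaration. The variable names are chosen for illustration only.

```python
# A name can be bound to values of different types; no declaration is needed.
value = 42
first_type = type(value).__name__

value = "forty-two"
second_type = type(value).__name__

value = [4, 2]
third_type = type(value).__name__

# Each assignment changed the type of the object the name refers to.
print(first_type, second_type, third_type)
```

The type belongs to the object, not to the name, which is why the same name can refer to an integer, a string and a list in turn.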

Python adoption is widespread because of its clear syntax and readability. Used often in data analytics, machine learning (ML) and web development, Python yields code that is easy to read, understand and learn. Python uses indentation, rather than braces or keywords, to mark blocks of statements, which helps keep code consistent. Applications developed with Python tend to be smaller than software built with programming languages like Java, so programmers generally have to type less code.
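The following minimal sketch shows how indentation alone marks the body of a function, a loop and each branch of a conditional. The function name `classify` and its sample input are hypothetical.

```python
def classify(numbers):
    """Split numbers into evens and odds; indentation marks each block."""
    evens, odds = [], []
    for n in numbers:          # loop body is indented one level
        if n % 2 == 0:         # branch bodies are indented one level further
            evens.append(n)
        else:
            odds.append(n)
    return evens, odds         # back at function level, after the loop


evens, odds = classify([1, 2, 3, 4, 5])
print(evens, odds)
```

Because the layout is part of the syntax, code that looks correctly nested is correctly nested, which is one reason Python code tends to read consistently across projects.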

Python programming also remains popular because the interpreter is good at detecting errors at runtime. When it encounters bad input or a bug in Python code, it raises an exception instead of causing a segmentation fault. Because the debugger is itself written in Python, users don't have to worry about conflicts between the debugger and the language.
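As a rough sketch of this behavior, the example below feeds bad input to built-in operations and catches the resulting exceptions. The helper `parse_age` is a hypothetical name used only for illustration.

```python
def parse_age(text):
    """Return the age as an int, or None if the input is not usable."""
    try:
        age = int(text)        # raises ValueError for non-numeric text
    except ValueError:
        return None
    if age < 0:
        return None
    return age


items = [1, 2, 3]
try:
    items[10]                  # out-of-range access raises IndexError
except IndexError as exc:
    message = str(exc)

print(parse_age("42"), parse_age("abc"), message)
```

In both cases the program keeps running and can decide how to recover, rather than crashing on an invalid memory access.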

Python continues to grow and is actively used by some of the largest multinational corporations, many of which also support Python with guides, tutorials and resources.

Python use cases

Python offers dynamic data types, ready-made classes and interfaces to many system calls and libraries. Users can also extend it using another programming language like C or C++. Its high-level data structures, dynamic binding and dynamic typing make it one of the go-to programming languages for rapid application development.
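To make the high-level data structures and dynamic binding described above more concrete, here is a minimal sketch. The classes `Duck` and `Robot` and the sample sentence are invented for illustration.

```python
from collections import Counter

# Built-in and standard library containers handle common tasks in one line.
words = "the quick brown fox jumps over the lazy dog the end".split()
counts = Counter(words)            # dictionary-like mapping of word -> frequency
unique_sorted = sorted(set(words)) # set removes duplicates, sorted returns a list


class Duck:
    def speak(self):
        return "Quack"


class Robot:
    def speak(self):
        return "Beep"


# Dynamic binding: the method to call is looked up at runtime on each object,
# so unrelated classes can be used interchangeably if they offer speak().
sounds = [thing.speak() for thing in (Duck(), Robot())]
print(counts["the"], unique_sorted[0], sounds)
```

No interface declarations or type annotations are required for this to work, which is part of what makes the language suited to rapid application development.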

Python is also often used as a glue or scripting language that connects existing components. For example, users can use it for scripting in Microsoft's Active Server Pages (ASP) technology.

Primary use cases for Python include the following:

- ML

- server-side web development

- software development

- system scripting

Anyone who uses Facebook, Google, Instagram, Reddit, Spotify or YouTube has used a service that runs Python code. Python code can also be found in the scoreboard system for the Melbourne Cricket Ground in Australia. Zope (Z Object Publishing Environment), a popular web application server, is written in Python.

Python training and tools

As a result of extensive community support and a syntax that stresses readability, Python is relatively easy to learn. Some online courses offer to teach users Python programming in six weeks.

Python also supports modules and packages, which encourage program modularity and code reuse. As users work with Python, they will want to be familiar with the current version, development environment and supporting tools, specifically the following:

- Python 3 is the current major version. Python 3.0 dates to 2008, and new 3.x releases continue to appear. Unlike previous updates, which concentrated on fixing bugs in earlier versions of Python, Python 3 introduced language and coding style changes that broke backward compatibility. As a result, code written for Python 2 often does not run unchanged on Python 3. The redesign aimed to reduce repetition and redundancy by removing duplicate ways of accomplishing the same task, which helped make Python programming easier for beginners to learn.

- Integrated Development and Learning Environment (IDLE) is the development environment bundled with the standard Python distribution. It provides access to Python's interactive mode through the Python shell window. Users can also create and edit Python source files in the IDLE file editor.

- Python Launcher lets developers on macOS run Python scripts from the desktop. Select Python Launcher as the default application for .py files, and then double-click any script in the Finder window to run it. Python Launcher offers many options to control how users launch Python scripts.

- Anaconda is a leading open source distribution of the Python and R programming languages that bundles more than 300 libraries aimed at ML projects. Its primary objective is to simplify package management and deployment.
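To make the Python 3 item in the list above more concrete, the short sketch below shows three well-known behaviors that differ from Python 2. It is an illustrative selection, not a complete list of changes, and the variable names are arbitrary.

```python
# print is a function in Python 3 (it was a statement in Python 2).
print("Hello from Python 3")

# The / operator always performs true division; // performs floor division.
true_quotient = 7 / 2
floor_quotient = 7 // 2

# Text strings are Unicode by default; bytes are a separate type.
text = "café"
encoded = text.encode("utf-8")
lengths = (len(text), len(encoded))  # characters vs. bytes

print(true_quotient, floor_quotient, lengths)
```

Differences like these are why Python 2 code usually needs changes before it runs on Python 3.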

Python is a highly cost-effective solution because both the interpreter and the extensive standard library are free. It is also highly versatile. For example, users can move quickly through edit-test-debug cycles because no separate compilation step is needed. For these and other reasons, software developers often prefer to code in Python and find that it helps increase their productivity.
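As one small example of what the standard library offers without any extra installation, the sketch below summarizes a list of numbers and serializes the result as JSON. The `readings` values are made up for illustration.

```python
import json
import statistics

# Hypothetical sample data.
readings = [12.5, 14.0, 13.5, 15.0]

# statistics and json both ship with the interpreter.
summary = {
    "mean": statistics.mean(readings),
    "median": statistics.median(readings),
    "max": max(readings),
}
encoded = json.dumps(summary, sort_keys=True)
print(encoded)
```

Saving this to a file and running it with the interpreter is all that is needed; editing the data and rerunning gives immediate feedback, with no build step in between.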