Cost to run EC2 instances vs. Docker containers

Fans of Docker claim it has many advantages for application deployment. But how does this translate in AWS? We do a trial run to compare costs.

As Docker excitement builds, advocates of the technology tout the benefits of running Docker containers on AWS. But to truly understand how Docker works in relation to Amazon EC2 instances, including cost comparisons and capabilities, it's helpful to do a trial run.

This experiment looks at the price of Docker containers under several configurations on a system with four applications, each of which requires 6 GB of memory. All four applications run at more or less the same time, though each app's usage spikes independently of the others.

Crunch the container cost numbers

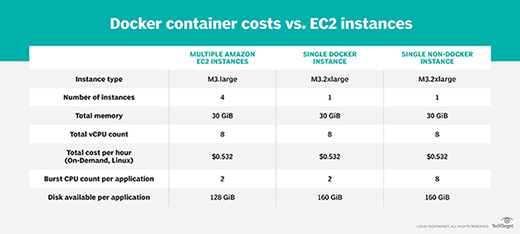

The table shows three ways you could run those four applications. The first column describes a prototypical configuration with multiple EC2 instances, the second column describes a single large instance using Docker, and the third column describes that same large instance running without Docker.

Each application could run on its own m3.large machine in a single-tenancy configuration. Alternatively, all four applications could run on one m3.2xlarge, with that machine hosting four Docker containers. Or the four applications could share resources and run natively on the same m3.2xlarge at the OS level.
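To make the sizing behind these three options concrete, here is a minimal Python sketch. It assumes the memory figures AWS published for the M3 family (7.5 GiB for m3.large, 30 GiB for m3.2xlarge) and treats the 6 GB requirement as 6 GiB for simplicity. The names `InstanceType`, `apps_per_instance`, `instances_needed` and `hourly_cost` are hypothetical helpers, and the hourly prices in the usage lines are placeholders, not actual AWS rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float


# Assumed specs (AWS's published M3 figures); verify before relying on them.
M3_LARGE = InstanceType("m3.large", vcpus=2, memory_gib=7.5)
M3_2XLARGE = InstanceType("m3.2xlarge", vcpus=8, memory_gib=30.0)

APP_COUNT = 4         # from the scenario
APP_MEMORY_GIB = 6.0  # from the scenario, treating GB as GiB


def apps_per_instance(instance, app_memory_gib=APP_MEMORY_GIB):
    """How many apps fit on one instance, judged by memory alone."""
    return int(instance.memory_gib // app_memory_gib)


def instances_needed(instance, app_count=APP_COUNT):
    """Smallest number of instances that can hold every app."""
    per_instance = apps_per_instance(instance)
    if per_instance == 0:
        raise ValueError(f"{instance.name} cannot hold even one app")
    return -(-app_count // per_instance)  # ceiling division


def hourly_cost(instance, hourly_price, app_count=APP_COUNT):
    """Total hourly cost; hourly_price is supplied by the caller."""
    return instances_needed(instance, app_count) * hourly_price


# Placeholder prices only; substitute current rates for your region.
print(instances_needed(M3_LARGE), hourly_cost(M3_LARGE, hourly_price=0.10))
print(instances_needed(M3_2XLARGE), hourly_cost(M3_2XLARGE, hourly_price=0.40))
```

If the larger instance costs about four times the smaller one per hour, the two totals come out the same, which is why the comparison turns on how resources are shared rather than on the bill.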

On the larger machine, the Docker and non-Docker configurations are equivalent except for burst mode. The larger machine has eight CPUs. In the Docker configuration, they are rigidly allocated among the containers; in the non-Docker configuration, any app can use all eight CPUs as needed. It's unlikely that all four applications would have the exact same CPU requirements at the exact same time, so more CPUs would be available to a busy application in the non-Docker configuration.
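The burst-mode difference can be illustrated with a small Python sketch. It assumes, as this scenario does, that each container is held to an equal fixed share of the eight CPUs, while natively run apps draw from one shared pool. The function names and the demand figures are hypothetical, chosen only to show one app spiking while the others idle.

```python
def served_rigid(demands, total_cpus):
    """Each app is capped at an equal fixed share of the CPUs."""
    share = total_cpus / len(demands)
    return [min(demand, share) for demand in demands]


def served_shared(demands, total_cpus):
    """Apps draw from one pool; scale everyone back only if it is oversubscribed."""
    total_demand = sum(demands)
    if total_demand <= total_cpus:
        return list(demands)
    scale = total_cpus / total_demand
    return [demand * scale for demand in demands]


# Hypothetical moment in time: one app spikes to 5 CPUs, the rest need 1 each.
demands = [5, 1, 1, 1]
print(served_rigid(demands, total_cpus=8))
print(served_shared(demands, total_cpus=8))
```

Under the fixed shares, the spiking app is held to two CPUs while three sit idle; with a shared pool, the same demand fits within the eight CPUs available.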

A similar scenario exists when looking at disk storage. The larger machine has a total of 160 GiB of local disk, which, in Docker, must be rigidly shared. In a native configuration, each application can use a dynamic amount of disk. But one application might use more than its share of disk, leaving less disk space available for the other apps.
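The disk trade-off can be sketched the same way. This example assumes equal fixed quotas for the Docker case and a simple first-come, first-served pool for the native case; both function names and the request sizes are hypothetical.

```python
def allocate_quota(requests_gib, total_gib):
    """Each app gets at most an equal fixed slice of the disk."""
    quota = total_gib / len(requests_gib)
    return [min(request, quota) for request in requests_gib]


def allocate_first_come(requests_gib, total_gib):
    """Apps take what they ask for, in order, until the disk runs out."""
    remaining = total_gib
    granted = []
    for request in requests_gib:
        grant = min(request, remaining)
        granted.append(grant)
        remaining -= grant
    return granted


# Hypothetical requests: the first app wants far more than a quarter of 160 GiB.
requests_gib = [130, 20, 20, 20]
print(allocate_quota(requests_gib, total_gib=160))
print(allocate_first_come(requests_gib, total_gib=160))
```

With quotas, the large request is capped and the other three apps get everything they asked for. With the shared pool, the first app gets its full request, but the later apps are left short.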

Docker hype vs. reality

So, if Docker containers aren't necessarily cheaper or more powerful than direct use of EC2 instances, then why do they get so much attention?

One area where Docker stands out is in deploying demonstration systems. When testing or doing a prototype deployment of a complex multi-application system, containers can be useful because they enable you to launch a single Docker image. In a prototype environment, configuration factors, such as long-term operating costs or effective use of resources, are less important than the need to deploy the system quickly.

On the other hand, AWS has specific technologies designed to ease the burden of deploying complex systems. Elastic Beanstalk, for example, creates a simple configuration to deploy a standard, complete web application.

Whatever your position on Docker, enterprise IT should watch this space carefully. Large companies, like Google and Microsoft, recently endorsed Docker-like technology. Others are sure to follow.